Senior Developer in 24 hours

In the realm of technology, the path to becoming a senior software developer is a journey marked by continuous learning, problem-solving prowess, and a relentless pursuit of excellence. This book serves as your compass in navigating the intricate landscapes of software development, offering a comprehensive guide to acquiring the skills that elevate you to the coveted position of a senior developer.

Embark on a transformative odyssey as you delve into the nuances of the software developement lifecycle, learn to decipher complex problems, and cultivate the art of collaboration within development teams. With real-world examples and hands-on exercises, this book not only equips you with the technical acumen required for senior roles but also delves into the soft skills and leadership qualities that distinguish exceptional developers. Whether you're a budding programmer seeking to ascend the ranks or an experienced developer aiming to stay at the top of your game, this guide is your roadmap to mastering the skill set of arguably the most trascendental occupation of the information age: software developer.

What Makes a Senior Software Developer?

In the world of software development, a Senior Software Developer is the core of the team. The people who can truly fill the role of a Senior Software Developer are few, and as such, in extremely high demand. This book is an attempt to condense the skills and traits required to make the jump from a Junior Software Developer to a Senior Software Developer. But the first step is to define what we mean by Senior Software Developer and Junior Software Developer.

Junior Software Developers are normally entry-level software developers who assist the development team with all aspects of the software development lifecycle. However, their primary role is to write code and fix bugs, normally based on well-defined tickets and bug reports. The day-to-day of a Junior Software Developer normally consists of picking up (or being assigned) a particular bug, working on it until completion, and then picking up the next bug. Besides tackling tickets, Junior Developers will become intimate with parts of the codebase, making their input valuable in other areas of the design and development process.

Senior Software Developers will spend a lot of their time writing code and closing tickets, but the value they provide goes far beyond. Senior Developers are a force multiplier that will enable Junior Developers to operate at their full capacity. In this book we are going to focus on how we can become enable the creation of better software, a skill above just writing better code.

What a Senior Software Developer is not?

Some characteristics and traits are associated with a Senior Software Developer but do not contribute directly to the effectiveness of a Senior Software Developer. For example:

Years of experience: Many times organizations, and especially Human Resource departments and recruiters, equate a lengthy tenure with a Senior position. While having multiple years of experience is typical for Senior Developers, just having those years does not provide on its own the skills that a Senior Software Developer should bring to the table.

Writing code the fastest: A Senior Developer should be able to solve problems faster, but that doesn't necessarily mean that they write raw code faster. Senior Developers are better at understanding what code should be written, what code or libraries can be reused, and what code shouldn't be written in the first place.

Intimate knowledge of the company's codebase: While many Senior Developers will have intimate knowledge of the codebase that they are working on, it is not in itself a hard requirement. One of the attributes that Senior Developers bring is the ability to quickly learn their way around a new codebase.

Junior Software Developer vs. Senior Software Developer

To plot our path from Junior Software Developer to Senior Software Developer, it's vital to understand the difference between the two. Or at the very least understand the basic role of Junior Software Developers.

Junior Developers follow a very simple workflow, where they pick up a ticket, work on the ticket until it's done, submmit their changes, and then pick up a new ticket to start the process all over again. Senior Software Developers will also work on tickets but also need to keep a more holistic view of the system. This holistic view includes:

- Gathering and grooming requirements

- Ensuring functional and non-functional requirements are met

- Having an opinionated take on whether code should be written, or if alternative solutions should be used

- Understanding the end-to-end Software Development Lifecycle (SDLC)

We'll go into a lot more detail in the next chapters, but we can summarize the main differences between Junior and Senior Developers in the following table:

| Junior | Senior |

|---|---|

| Focused on tickets | Holistic view of application |

| Functional Requirements | Functional and non Functional Requirements |

| Writes code | Should we even write this code? Can we remove this code? |

| Limited view of SDLC | End-to-end view of SDLC |

Architect vs. Senior Software Developer

Another persona that is often seen in the higher ranks of software development organizations is the Architect. Normally prefaced by even more enthusiastic terms such as "Enterprise" or "Solution", the Software Architect is another potential career development path for Software Developers.

Architects normally work at the organizational level, keeping a holistic view of the system, rather than focusing on an individual component level. Sr. Software Developers will focus more on their component, while also keeping an eye out to understand how it fits within the bigger picture.

Architects work mostly at the development organization level, while Senior Software Engineers work more at the team level. This means that in an organization, you will find many more Senior Software Engineers with only a few Architects.

The deliverables produced by Architects are more theoretical, think UML diagrams, design documentation, standards, and occasionally some proof of concept code. On the other hand, Senior Software Engineers will produce a lot more code, with some supporting documentation (design diagrams, standards, etc.).

It is worth noting that in other cases Enterprise Architects or Solution Architects are part of the sales organization, and as such their job is more aligned with architecting "Sales Proposals" or "RFP (Request For Proposals) Responses" rather than architecting the actual system that will be built.

Both the Software Architect and the Senior Software Developer must be thought leaders in the organization and must collaborate to bring about the vision of the organization. An architect who proposes a design that the software developer can't deliver is not contributing to the fulfillment of this vision. In the same manner, a software developer who works without understanding how their components fit in the overall organization is not contributing value to the organization.

We can summarize the main differences between an Enterprise Architect and a Senior Developer in the following table:

| Architect | Senior Developer |

|---|---|

| Holistic view of system | Deep knowledge of a component |

| Concerned about non Functional Requirements | Concerned about Functional and non Functional Requirements |

| Organization level | Team level |

Backend vs Front-end Development

It is also important to note that for the scope of this book, we're going to be focusing on backend development. While some of the topics covered in the book might apply to front-end development, the ecosystem is different enough that to achieve proficiency in either field, true specialization is required.

About the Author

Andrés Olarte has tackled multiple changes in IT, from working as a systems adminstrator, to Java Development of enterprise applications, to working as a consultant helping customer develop cloud native application.

Currently, Andrés works as a Strategic Cloud Engineer for the professional services organization at Google Cloud.

Andrés loves learning new things and sharing the knowledge. He has spoken at multiple events, such as "Chicago Java Users Group".

Andrés writes at his personal blog The Java Process.

About this Book

The book is structured around 24 chapters. Each chapter covers one topic in depth, and should be readable in one hour or less. Depending on your previously experience and knowledge, you might want to branch out and seek more information on any particular topic. Each chapter will provide a concise list of the tools that are referenced.

As part of the process of condensing the information and techniques for this book, a series of videos discussing the topics were created. The videos will listed within each chapter.

Who is the target audience of this book?

This book is geared towards "entry-level" or "junior" software developers, that want to grow their careers. If you already have the basic knowledge of how to write code, the material here will help you gain the skills to move up in your career.

In this book, we skip over a lot of basic topics, to focus on the areas where a Senior Software Developer can provide value. In this book, we are not trying to replace the myriad of coding boot camps or beginner tutorials that are out there, but rather provide a structured set of next steps to advance in your career. We also skip a formal review of Computer Science focused topics. There are plenty of sources for Computer Science theory, including preparation for CS-heavy interview questions that are popular in some companies.

If you are already a Senior Software Developer, you might find the material useful as a reference, as we provide opinionated solutions to common problems that are found in the field.

Decisions, decisions, decisions

One of the main roles of a Senior Software Developer is to make decisions.

When we hear "decision maker", we normally think of a high level executive,

however software developers make tens if not hundreds of decisions every day.

Some of these decisions might be small, but some might be very impactful.

A bad decision when choosing a timeout could cause a mission critical system to fail,

and a bad decision regarding our concurrency logic could limit how much the operations of the business can scale.

In a large business, one of those bad decisions could have a price tag of thousands or millions of dollars.

For example, in 2012, Knight Capital Americas LLC,

a global financial services firm engaging in market making, experienced a bug.

This bug caused a significant error in the operation of its automated routing system for equity orders, known as

SMARS1. Due to a misconfigured feature flag, SMARS routed millions of orders into the market over a 45-minute period.

The incident cause a loss of $450 million. 2

The decisions a software developer makes are very important, and we always must take into consideration three aspects:

- The problem at hand

- The technologies available

- The context (from a technical perspective, but also from an organizational perspective)

Some of the decisions we can take on our own, for example: "How should we name a variable?" Other decisions will require consulting other teammates or external stakeholders. For larger decisions that impact the overal architecture of a solution, we might even have to negotiate to reach a concensus. The more ramifications a decision has outside our application, the more we'll have to involve other parties.

Whatever the decision, it's important to always keep in mind the context in which we're taking the decision. Many of the decisions we discuss here, might have already be defined in a large organization, and if we need to go against those standards, we need to have a very good reason to justify it.

Which brings us to another point to keep in mind: pick your battles. When trying to defend our point of view against someone with an oposing view, it's important to weigh if there's value in prevailing, while potentially spending a lot of time or burning political capital. Sometimes it's better to concede against a differing opinion, when there's marginal to choosing either alternative.

We can start by clasifying decisions into Type 1, which are not easy to reverse or non-reversable at all; or Type 2 decision which are easy to reverse if needed.

In an Amazon shareholder letter, CEO Jeff Bezos explained how we need to fight the tendency to apply apply a heavy decision making to decision that don't merit that much thought:

Some decisions are consequential and irreversible or nearly irreversible – one-way doors – and these decisions must be made methodically, carefully, slowly, with great deliberation and consultation. If you walk through and don’t like what you see on the other side, you can’t get back to where you were before. We can call these Type 1 decisions. But most decisions aren’t like that – they are changeable, reversible – they’re two-way doors. If you’ve made a suboptimal Type 2 decision, you don’t have to live with the consequences for that long. You can reopen the door and go back through. Type 2 decisions can and should be made quickly by high judgment individuals or small groups.

As organizations get larger, there seems to be a tendency to use the heavy-weight Type 1 decision-making process on most decisions, including many Type 2 decisions. The end result of this is slowness, unthoughtful risk aversion, failure to experiment sufficiently, and consequently diminished invention. We’ll have to figure out how to fight that tendency.

-- Jeff Bezos 3

With that said, it's important to understand in which decisions, we must apply a heavy and involved decision making process. Discussions can always be valuable to have, but must always be respectful.

We need to be ready to defend our decisions, so we need to think about the different trade-offs, as well as the context in which we're taking these decisions.

Throughout the book, key decision points will be higlighted in clearly marked boxes:

Tech Stacks

Most of this book covers material that is independent of a particular tech stack. However, when it comes to practical examples, we focus on three tech stacks that are popular for backend development:

Other languages will be mentioned if they are relevant to a particular topic.

Introduction Video

1 "ORDER INSTITUTING ADMINISTRATIVE AND CEASE-AND-DESIST PROCEEDINGS,". Securities and Exchange Commission, 2013, https://www.sec.gov/files/litigation/admin/2013/34-70694.pdf

2 Popper, N. (2012, August 2). Knight Capital says trading glitch cost it $440 million. The New York Times. https://archive.nytimes.com/dealbook.nytimes.com/2012/08/02/knight-capital-says-trading-mishap-cost-it-440-million/

3 "LETTER TO SHAREHOLDERS,". Jeffrey P. Bezos, 2015, https://www.sec.gov/Archives/edgar/data/1018724/000119312516530910/d168744dex991.htm

From User Requirements to Actionable Tickets

Why is This Important?

When people think of software developers, they think of someone who writes code all day long. While writing code is one of the main tasks of a developer, many developers spend lots of time on other activities. These activities enable us to get to the point where we can write the code, or make sure that the code that we write is useful. In most teams, it is up to the Senior Developers to guide these activities to ensure that all of the developers have clear and actionable tickets.

In this chapter, we cover how can go from vague or abstract requirements to actionable tickets, by breaking down the process into several stages.

The Work Cycle of a Developer

As we mentioned in the introduction most software developers are used to a fairly simple work cycle in their day-to-day:

However, this assumes that the tickets have already been created. The person in charge of creating the tickets will vary from team to team. But in most teams, the Sr. Developers will at least have an active role in defining the scope of these tickets, and how large chunks of work will be divided into digestible tickets. At most, the Sr. Developer will have an active role in identifying and analyzing the user requirements. In this chapter, we will cover how we can create better tickets starting from raw user requirements.

Using a Structured Approach

Going from user requirements to actionable tickets can seem like a daunting endeavor. And like any big endeavor, it helps to break down the process into a structured approach that we can follow step by step.

The proposed approach can be seen in the diagram below:

Some of these terms can have different meanings depending on the context, so we'll define a common language that we'll be using throughout the book. Your organization or team might use slightly different meanings, so it's important to take the context into account.

- User Requirements: requirements set by the end user.

- User Journey: a set of steps a user takes to accomplish a goal.

- Epic: a large body of work with a unifying theme.

- Ticket: the smallest unit of work a developer undertakes.

Keep in mind that going from user requirements to actionable tickets is more an art than a science. The process also requires negotiation to determine what is technically feasible and valuable based on cost/benefit analysis. Not every feature request can be delivered within a reasonable timeframe or with limited resources. A Senior Developer must be willing to listen to other stakeholders and negotiate a lot of ambiguity to distill the high-quality tickets on top of which successful software is built.

User Requirements

User requirements are, as the name implies, the requirements set by the end user. Alternatively, they can be the requirements set by someone else on behalf of the user, sometimes with limited input from the user.

In general, who provides the user requirements?

- Users

- Product Owners

- Business Analysts

- Q/A Team

In cases where the user requirements are provided by someone other than the actual end user, how do we gather data to ensure we're really representing what the user wants? Many techniques exist to accomplish this goal:

- Surveys

- Interviews or focus groups with users

- User Observation

- Usage Data Collection

Other times, the features ideated in brainstorming sessions, where subject matter experts put together their collective creativity to come up with the great next innovation.

Sometimes the requirements we receive as a Software Developer are very detailed. Other times we will get very abstract or vague requirements, that read more like a wishlist.

In the worst case, we will receive non-actionable user requirements. For example:

- We want to improve the application

- We want our users to be more engaged

- We want our applications to be easier to use

In cases where we get these non-actionable requirements, we need to push back and either get more information or discard requirements that won't be translatable into any tangible work. As developers, we use our code to achieve goals, but our code can't bridge gaps in the vision of an organization. These user requirements must be identified early in the process and sent back or discarded.

User Journeys

Mapping user journeys is best done in sessions with representatives from the different areas that are affected by a particular application or piece of functionality.

This can be done in a whiteboard, to allow a more inclusive environment and provide a more holistic view of the different requirements and how they relate to each other.

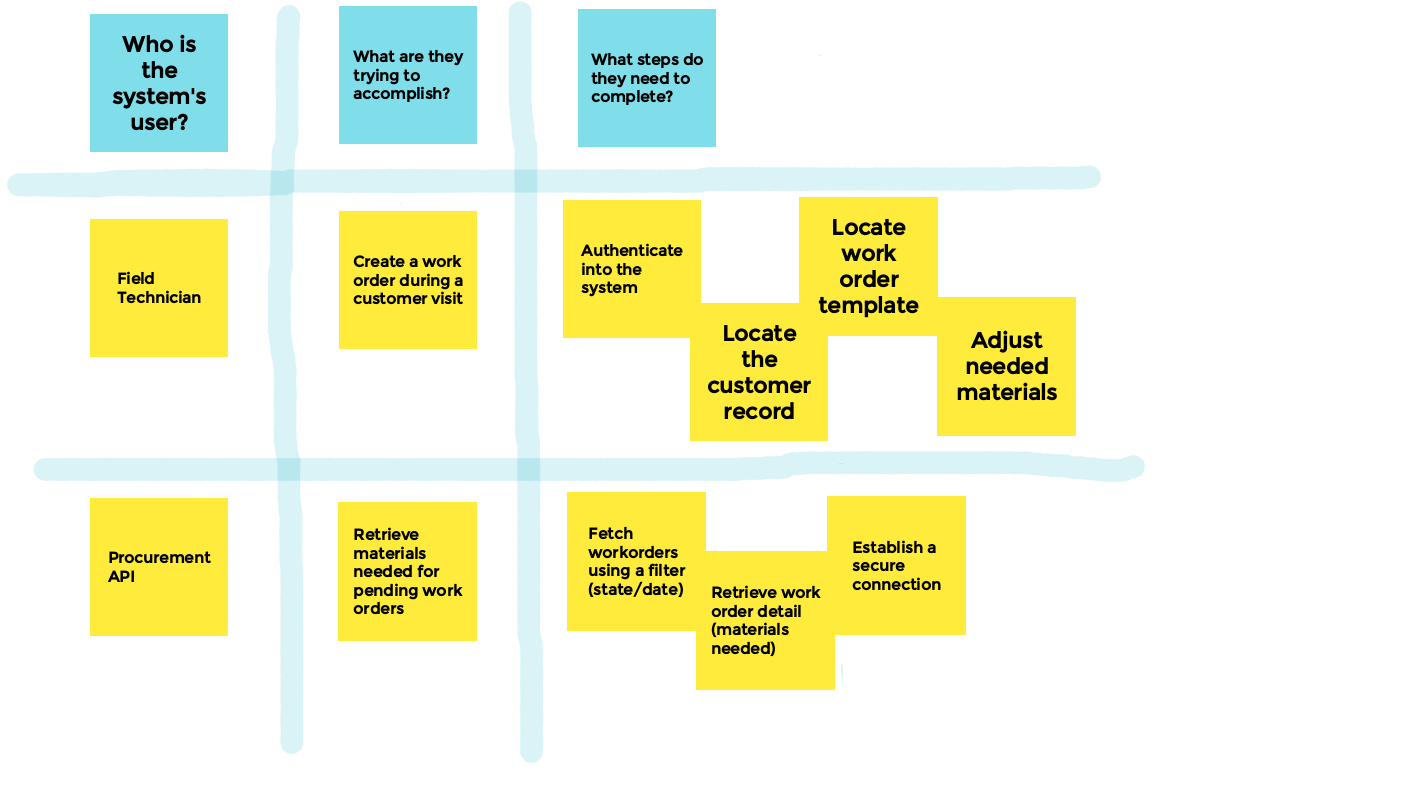

In these sessions, our objective is to document three critical pieces of information for each requirement:

- Who? Who is the user that is acting?

- What? What is the user trying to accomplish?

- How? How is the user going to achieve their goal?

Brainstorming will normally result in a series of users, goals, and steps. At the brainstorming phase, it's important to try to capture all of the proposed ideas, they can be refined at a later stage.

Once there are enough users, goals, and steps on the board, the next step is to organize them. Group together the ones that share common themes, and combine redundant ones.

Once the users, goals, and sets of steps are organized, and depending on the number of ideas up on the board, this might be a good time to take some time and vote for the ones that the team feels are most valuable.

If there's a feeling that ideas are still not properly represented on the board, you can repeat the brainstorming process until the group is satisfied.

Documenting User Journeys

Once some level of consensus has been achieved, it's important to document the user journeys in a non-ambiguous manner. Remember that one of the critical objectives of a user journey mapping session is to create a shared understanding of the user requirements. Even if there are detractors regarding a particular user journey, it's valuable to have a shared understanding. Objections can be better addressed when the specifics are clear to all parties involved.

Both activity diagrams and sequence diagrams can be used to show actions and relationships.

Activity diagrams show the order or flow of operations in a system, while a sequence diagram shows how objects collaborate and focuses on the order or time in which they happen. Generally, sequence diagrams can better illustrate complicated use cases where asynchronous operations take place.

Here we see an example sequence diagram:

Sequence diagrams can be stored as code. Storing diagrams as code makes it easier to share across the team and iterate. Someone with experience can quickly edit sequence diagrams on the fly, helping visualize the flow in real-time as a group of subject matter experts watches.

@startuml

actor "Actor" as Act1

participant "Component" as App

Act1 -> App : Message

App --> Act1: Response

Act1 ->> App : Async Message

App --> Act1: Async Response

loop while done!=true

Act1 -> App: Message

App --> Act1: Response

end

@enduml

Example sequence diagram:

Remember each user journey should tell us:

- Who is the user?

- What are they trying to accomplish?

- What steps do they need to complete?

At this point, you might have a lot of User Journeys. The next step would be to improve the focus by ranking them based on their relative importance to the organization. The ranking can be done by having the SMEs vote on their priorities, or maybe there's a product owner who has the responsibility to set priorities.

Creating Epics

Epics are a large body of work with a unifying theme. In this section, we will focus on epics that are derived from the functionality defined in user journeys, to continue the series of steps to go from user requirements to actionable tickets.

However, in the real world, epics might be defined differently and have different themes. For example, could be an Epic to encompass the work needed to make a release on a particular date, or an epic to remediate a shortcoming in an existing piece of software.

When talking about epics, we have to understand the context in which we are going to be developing the functionality described in an epic.

For example, is our software greenfield or brownfield?

- Greenfield: A project that starts from scratch and is not constrained by existing infrastructure or the need to integrate with legacy systems.

- Brownfield: Work on an existing application, or work on a new application that is severely constrained by how it has to integrate with legacy systems or infrastructure.

Is the software in active development, or just under maintenance?

- Active Development: A project where we're adding new features is considered in active development. These new features can be added either as part of the initial development or subsequent enhancements added after going into production. It is expected that new features will provide new value.

- Maintenance: A project where no new features are being added is considered to be in maintenance. Changes to software under maintenance are limited to bug fixes, security updates, changes to adhere to changing regulations, or the minimum changes needed to keep up with changing upstream or downstream dependencies. No new value is expected to be gained from the maintenance, but rather just a need to remain operational.

Based on the work we did documenting user journeys, we can use these user journeys as the basis for our epics. We can start creating one epic per user journey. Normally an epic will have enough scope to showcase a discreet piece of functionality, more than enough to create a sizeable number of tickets. As we work on planning or creating the tickets, it will become evident that there is overlap across some of the epics. It's important to identify these overlaps and extract them into standalone epics (or tickets as part of existing epics) The disconnect between how we construct user journeys, and how the software has to be built will always result in dependencies we have to manage.

For example, if we had an epic to create a new work order, they might look something like this:

- Epic: A Field Tech needs to create a new work order

- Ticket: Create authentication endpoint

- Ticket: Create an endpoint to search for customers

- Ticket: Create an endpoint to search work order templates

- Ticket: Create an endpoint to adjust materials

- Epic: A Customer Service Rep needs to create an appointment

- Ticket: Create an authentication endpoint

- Ticket: Create an endpoint to search for field techs

- Ticket: Create an endpoint to create an appointment record

It is evident that the first ticket "Create authentication endpoint" is a cross-cutting concern that both epics depend on, but is not limited the either one. And most importantly, we don't want to end up with two different authentication endpoints!

A holistic view is critical to effectively slice up the work into epics and tickets.

If we rearrange the epics and tickets, we can have better-defined epics:

- Epic: A Field Tech needs to create a new work order

Ticket: Create authentication endpoint- Ticket: Create an endpoint to search for customers

- Ticket: Create an endpoint to search work order templates

- Ticket: Create an endpoint to adjust materials

- Epic: A Customer Service Rep needs to create an appointment

Ticket: Create an authentication endpoint- Ticket: Create an endpoint to search for field techs

- Ticket: Create an endpoint to create an appointment record

- Epic: Provide an authentication mechanism

- Ticket: Create an authentication endpoint

Furthermore, we can then add more detail to the epics as we start thinking about the implementation details that might not be fully covered in our user journeys. For example, this might be a good time to add a ticket to manage the users:

- Epic: Provide an authentication mechanism

- Ticket: Create an authentication endpoint

- Ticket: Create user management functionality

There is a dependence on the authentication mechanism. Adjusting for these dependencies is an ever-present challenge. Rather than trying to perfectly align everything in a Gantt Chart to deal with dependencies, we provide a more agile approach in Chapter 4.

- Which epics do we need to create for each Critical User Journeys?

- Do any cross cutting epics need to be created?

- Which tickets will we create for each epic?

Creating Tickets

In the previous section, we have already started talking about creating tickets, in particular, what tickets to create. So in this section, we're going to focus on how the tickets should be created.

In general, a good ticket should have the following attributes:

- Define concrete functionality that can be tested

- Have a completion criteria

- Achievable in a single sprint

The ticket should have enough information describing a concrete piece of functionality. At this stage, the ambiguous and vague ideas that were brainstormed during the user journey brainstorming have to be fleshed out into detailed requirements that can be implemented with code.

As part of the definition of this concrete functionality, we can include:

- Any specifications that must be implemented, for example, API specifications already agreed upon.

- Sample scenarios or test cases that the functionality must be able to pass. These can be used with Behaviour Driven Development.

- Sample data that the functionality will process.

- Detailed diagrams or pseudo-code

- Detailed explanation of the desired functionality

The scope of a single ticket should be testable. At the early stages of development, the testing might be limited to unit testing, as we might depend on external components that are not yet available. For some of the very simple tickets, "testable" might mean something very simple. For example visually verifying that a label has been updated to fix a typo, or that a new button is visible even though there is no logic is attached to the button. To ensure that the scope of the ticket is enough to stand on its own, there should be concrete completion criteria.

To define an actionable completion criteria, you can reference back to the actions that were defined as part of the user journey.

For example, a good completion criteria would look like this:

- Must be accessible at

/customersas aGEToperation. - Must take an arbitrary string as a parameter

q. - Must return any customer records that match the text from the query.

- Matches against all fields in the customer record.

- If no matches, return an empty set.

- Response data format:

{"results": [...]}

Putting it all together an actionable ticket would look like this:

Title: Create endpoint to search for customers

Description:

Create

GETendpoint at/customersthat:

- Is accessible by field techs

- Takes an arbitrary string as parameter "

q"- Matches the string against all fields in customer records

- Returns the list of matching records

- If no matches, return an empty set

- Response data format:

{ "results": [ { "customer_id": "xxx", "first_name": "John", "last_name": "Doe", "email": "john@test.com", "phone": "555-5555" } ] }

A ticket that has a scope that is too limited is problematic because it can't be tested on its own. A ticket that is too big is also problematic. Tickets should be completable in a single sprint. How long a spring is, depends on your team, but is normally one or two weeks. At creation time, it is always hard to estimate the effort level of a ticket, which is why many teams have team meetings to estimate the level of effort (or "story points" in Agile lingo). These meetings depend on tickets already being created, which means that someone must make an initial decision regarding how big each ticket should be.

Developers should feel empowered to take any ticket assigned to them, and if they feel it's too big, break it down into more manageable tickets. However, if we're creating the tickets, we should take care to avoid creating tickets that are too big to start with.

- What information do we need to provide for each ticket to ensure it is implementable and testable?

- Does any of the tickets need to be broken up to make it completable in a single sprint?

From Bug Reports to Tickets

Sometimes tickets are not created in the process of adding new features to a piece of software. Sometimes tickets are created through another path, via bug reports. Bug reports are reactive, as they signal a condition that the development team had not detected during the normal development process. Bug reports can come at different times during the development process, as they are discovered at unpredictable times. For example, they can be detected by the Q&A team when they are testing a feature before launching it. Bugs can also be detected by the end users once an application has already been launched into production. Furthermore, bugs are sometimes detected by developers, as they're working on unrelated features.

In Chapter 5 and Chapter 6 we go into detail on how to write good unit and integration tests to limit the number of bugs that make it to the Q&A team or our users, but regardless of how good our unit tests are, we must be able to receive, triage, and act upon bug reports. From a high enough viewpoint, bug reports are just tickets that must be prioritized and addressed like any other ticket. Depending on the severity of the bug, the bug might upend our previous priorities and demand immediate action.

Depending on the mechanisms we have for reporting bug reports, tickets might be separate from bug reports.

Given the potentially disruptive effects of bug reports, we must have guidelines and mechanisms to ensure the tickets we generate from bug reports are of high quality. A high-quality bug report will serve to main purposes:

- Enable the developer to reproduce the issue.

- Help establish the severity of the bug, triage it, and prioritize it properly.

As Senior Developers, it is part of our responsibility to ensure that we get enough information to fulfill these two requirements.

A ticket to address a bug report should have at a minimum the following information:

- Title and Summary

- Expected vs. Actual result

- Steps to Reproduce

- Environment

- Reference information

- Logs, screenshots, URLs, etc.

- Severity, impact, and priority

Some of these pieces of data will come from the bug reports directly, while others might have to be compiled externally. For example, we might have to retrieve relevant logs and attach them to the ticket. If we have multiple multiple bug reports, it might be necessary to correlate and condense them to determine the severity and impact of a particular bug. A single ticket should be able to be linked to one or more related bug reports. This is easily supported by most ticket/issue tracking software.

In cases where bug reports are separate from tickets, it's important to always keep a link to be able to reference the original bug report (or bug reports). Maintaining this link will help developers reference the source of the bug in case there are any questions.

In the same vein, some of the information might have to be edited to adapt to an actionable ticket, for example by making the title and summary concise and descriptive.

We must make it easy for our users to generate high-quality bug reports. Making it easy for our users, will make it easier for us to manage these bug reports and promptly address any underlying issue.

For example, we create templates in our issue or ticket tracking software to ensure all of the proper fields are populated.

Specific templates can be developed for different components or services, to ensure we capture the relevant information.

This reduces the need to go back and ask for more data from the bug reporter.

If at all possible, embedding the bug report functionality as part of the application itself makes it easier for users to report their bugs, while at the same time having the opportunity to collect (with the consent of the user) diagnostic information and logs that will help to debug the issue.

Depending on the application, it is possible to create a unique identification number that allows us to track a transaction throughout our systems. This unique transaction ID can then be correlated to log messages. Capturing this ID as part of the bug report makes it easy for a developer to access the relevant logs for a bug report.

This ID can be exposed to the user so that they can reference it if they have to create a bug report or contact user support.

As part of facilitating the creation of high-quality bug reports, we must keep in mind what are the typical problems we encounter with bug reports:

- Vague wording

- Lack of steps to reproduce

- No expected outcome

- Ambiguous actual outcome

- Missing attachments

- Misleading information

Regardless of how well we craft the bug reporting mechanism,

there will be times when we will have to deal with problematic bug reports.

Normally the biggest problem is the lack of instructions to replicate the bug.

In most cases, the first step is to reach back to the bug reporter and get more clarifying information.

Many bug reports can be clarified with a little effort and provide a good experience to our users.

Keep in mind that many users might use a different vocabulary than what developers might use, and asking for clarification should be done in an open-minded way.

Sometimes it might not be possible to get enough information to create an actionable ticket,

and in such cases, it's reasonable to close with "Can't reproduce" or the equivalent status in your issue tracking software.

When dealing with bug reports, especially ones coming from users, remember that there is normally some level of frustration from the user.

We must be objective and fair.

Always keep in mind the ultimate goal is to have better software.

- What information do we need to have useful bug reports?

- How are we going to gather this information?

Prioritizing Bug Reports

Once we have created a high-quality actionable ticket based on a bug report, we have to determine how to act on it.

Should a developer drop everything they're doing and fix it?

Should it be an all-hands-on-deck trying to fix it? Even other teams?

Can it just go into our backlog for the next sprint?

As a Senior Developer, you should have the context to answer this. However, we can different frameworks to have a more structured and objective mechanism.

For example, a probability impact risk matrix can be used as a reference to prioritize a bug ticket. Using such a matrix, we consider how many users are potentially affected, and how severe the impact is for those that are affected.

If we had five priorities ("Highest", "High", "Medium", "Low", and "Lowest") we could create a risk matrix to determine the priority for a particular bug.

| Negligible | Minor | Moderate | Significant | Severe | |

|---|---|---|---|---|---|

| Very Likely | Low | Medium | High | Highest | Highest |

| Likely | Very Low | Low | Medium | High | Highest |

| Probable | Very Low | Low | Medium | High | High |

| Unlikely | Very Low | Low | Low | Medium | High |

| Very Unlikely | Very Low | Very Low | Low | Medium | Medium |

A word of caution with the risk matrix: Most people tend to classify every bug at either high or low extreme, reducing the usefulness of the matrix.

There are other mechanisms that can help you prioritize bug reports:

- Data Loss: Any bug that causes data loss will have the highest priority.

- SLO impact: If you have well-established SLOs (Service Level Objects), you can prioritize a bug depending on the impact it has on the SLO and Error Budget. We into detail about SLOs and Error budgets in Chapter 10.

Tools Referenced

- PlantUML Open-source tool allowing users to create diagrams from a plain text language. PlantUML makes it very easy to generate sequence diagrams, as well as a plethora of other diagram types. Many of the diagrams in this book are rendered using PlantUML. PlantUML can be run locally or you can use the hosted service.

Videos

Identifying and Analyzing User Requirements

Identifying and Analyzing User Requirements, continued

Foundation of a Software Application

Why is This Important?

We don't always get the privilege to build an application from scratch. But when we do, creating a solid foundation is one of the most important tasks to ensure the success of the project. In this section, we go into details

Decisions, Decisions

As a Sr. Software Developer, we have to constantly make decisions, from little details to very big transcendental choices that will have ramifications for years to come.

In the introduction of the book, we discussed how some of the decisions we have to make can have transcendental implications for an organization. With this in mind, we can start thinking about the specific decisions we have to make to get a software project started from scratch. In the context of this book, this software project can take different shapes. It can be a back-end service that our organization will host, a software product that our customers will run, a shared component meant to be consumed by other projects, etc.

These basic decisions include:

- Programming language

- Language version to target

- Frameworks to adopt

- Libraries to use

- Where to store the code

- Number of code repositories and their structure

- The structure of the code

A lot of these are already defined in large organizations, so we always need to take that context into account. Nonetheless, we're going to go through the different decisions in detail in the following sections.

Choosing the Language and Language Version

When faced with a new project, many developers want to dive right in and start writing code.

But before we print that first Hello World!, we need to know which programming language we're going to use.

Many times this will be obvious, but we're still going to explore the process through which we arrive at a decision.

To choose the language and the specific language version, it's important to understand the constraints under which we're operating.

- What language or languages does our team know?

- What languages does our organization support?

- If our code is going to be shipped to customers, what languages do our customers favor?

- Do we have required language-specific dependencies?

Languages Within the Team

Most teams are assembled around a particular skill set, with language familiarity being one of the primary skills considered. Some team members will be motivated to try and learn new languages, while other team members would rather specialize in one primary language and would rather not fork to learn a new language.

Understanding the skillset (and willingness to learn new ones) of our team is the first step to making the decision regarding which language to use.

With that said, some shifts are relatively minor and might be more feasible, in particular when we're shifting to a related language.

The smallest shift is when we upgrade the major version of our language. For example, upgrading from Python 2 to Python 3 is a small but significant change. The syntax is similar, but not exactly equal, and many of the third-party libraries are not compatible from 2 to 3.

A bigger shift is when we move to a related language in the same family. For example, a Java team could make a jump Kotlin with relatively little trouble. Kotlin is heavily influenced by Java, runs on the same JVM (Java Virtual Machine), can interoperate with Java, has the same threading model, uses the same dependency management mechanism, etc. Both languages share the same programming paradigm and ecosystem, and their syntax is not that dissimilar.

Similarly, moving from JavaScript to TypeScript is a much smaller shift since they share most of the syntax and the same programming paradigm and ecosystem.

Bigger jumps, for example from Java to JavaScript or TypeScript, need to be very well supported. These changes must be very well supported, since they require not only learning a new syntax, but also a new ecosystem, and potentially a new programming paradigm.

Languages Within the Organization

The popularization of container-based workflows (Docker containers in particular) has opened up the gates for organizations to be able to support a lot more different languages. Suddenly we can support more runtimes, more heterogeneous build pipelines, etc. However, just because one can build it and run it, doesn't mean that the organization is fully set up to support a new language or even a different version of a language.

We need to ensure that the language we choose is supported by the different infrastructure that plays a part in the wider SDLC:

- Artifact registries

- Security scanners

- Monitoring tools

In the same sense, it might be the organization itself forcing the change, because they're dropping support for our language of choice (or a particular version).

Languages and External Support

As we're choosing our language we must also understand if there are external constraints that we need to satisfy.

Some of these constraints might be hard. For example, we might have a hard dependency on a close source library that is only supported in a particular language, or maybe even a particular version of a language. In such cases, we must decide within those constraints.

In other cases, constraints might be softer. If our software is going to be shipped to external users packaged as an application, there might be pushback to change the runtime. For example, there might be pushback to require our users to change their Java Runtime Environment. In these cases, there might be valid reasons to push our users to make those changes, even against any pushback. A runtime reaching end-of-life is a very obvious one, but providing new features or better performance might make forcing the change more appealing.

Language Features

Once we have taken into account the constraints in which we can select the language based on the area that excites us developers: features!. What language provides the features that will make it easier to write the best software?

Do we want an object-oriented language like Java? or a more multi-purpose language that supports both an object and procedural approach like Python?

Does a particular runtime provide a better performance for our particular use case? What threading model works best for us? In Chapter 11 we take a look at the difference in threading models for the JVM, NodeJS, and Python.

Which ecosystem has the libraries and frameworks that will help us the most? For example, in the area of Data Science, Python has a very rich ecosystem.

And within one particular language, do we want to target a particular minimum version to get any new features that will make our lives easier?

- What language are we going to use?

- What version of the language are we going to target?

Choosing the Framework

Once we decide on a particular language to target, we move up to the next level of the foundation. In modern software development, we rarely build things from scratch, but rather leverage frameworks that provide a lot of core functionality so that we can focus on the functionality that will differentiate our software.

In choosing the framework we have to consider what services it provides and contrast that with the services that our application will need.

There are many different frameworks and many types of frameworks that provide different functionality. For example:

Many different types of frameworks:

- Dependency Injection

- Model View Controller (MVC)

- RESTful and Web Services

- Persistence

- Messaging and Enterprise Integration Patterns (EIP)

- Batching and Scheduling

Some frameworks will cover multiple areas, but many times we still have to mix and match. To select a framework or frameworks we need to determine the functionality that our application will use, and then select the

This must be done under the same constraints that we used to select our language:

- Knowledge and familiarity within our team

- Support within the company

- Compatibility with any external components with which we have a hard dependency

- If we're shipping our code to a customer, support among them

In the following sections, we're going to introduce the three frameworks that we will focus on.

Spring Boot

Spring Boot is a Java framework that packages a large number of Spring components, and provides "opinionated" defaults that minimize the configuration required.

Spring Boot is a modular framework and includes components such as:

- Spring Dependency Injection

- Spring MVC

- Spring Data (Persistence)

Many other modules can be added to provide more functionality.

Spring Boot is often used for RESTful applications, but can also be used to build other kinds of applications, such as event-driven or batch applications.

Express

Express (sometimes referred to as Express.js) is a back-end web application framework for building RESTful APIs with Node.js. As a framework oriented around web applications and web services, Express provides a robust routing mechanism, and many helpers for HTTP operations (redirection, caching, etc.)

Express doesn't provide dedicated Dependency Injection, but it can be achieved using core Javascript functionality.

Flask

Flask is a web framework for Python. It is geared toward web applications and RESTful APIs.

As a "micro-framework", Flask focuses on a very specific feature set. To fill any gaps, flask supports extensions that can add application features. Extensions exist for object-relational mappers, form validation, upload handling, and various open authentication technologies. For example, Flask benefits from being integrated with a dependency injection framework (such as the aptly named Dependency Injector).

- What features do we need from our framework or frameworks?

- What framework or frameworks are we going to use?

Other Frameworks

In this section, we introduced some of the frameworks that we're going to focus on throughout the book. We have selected these due to their popularity, but there are many other high-quality options out there to consider.

Libraries

A framework provides a lot of functionality, but there is normally a need to fill in gaps with extra libraries. Normally these extra libraries are either related to the core functional requirements or help with cross-cutting concerns.

For the core functionality, the required libraries will be based on the subject expertise of the functionality itself.

For the libraries that will provide more generic functionality, we cover cross-cutting concerns in future sections.

Version Control System

Keeping the source code secure is paramount to having a healthy development experience. Code must be stored in a Version Control System (VCS) or a Source Code Management (SCM) system. Nowadays most teams choose Git for their version control system, and it is widely considered the industry-wide de-facto standard. However, there are other modern options, both open source and proprietary, that bring some differentiation that might be important to your team. Also, depending on your organization you might be forced to use a legacy Version Control System, although this is becoming less and less frequent.

From here on out, the book will assume Git as the Version Control System.

Repository Structure

When we're getting ready to store our source code in our Version Control System, we need to make a few decisions related to how we're going to structure the code.

We might choose to have a single repository for our application, regardless of how many components (binaries, libraries) it contains. We can separate the different components in different directories while having a single place for all of the code for our application.

In some cases, it might be necessary to separate some components or modules into different repositories. This might be required in a few cases:

- Part of the code will be shared with external parties. For example, a client library will be released as open source, while the server-side code remains proprietary

- It is expected that in the future, different components will be managed by other teams and we want to completely delegate to that team when the time comes.

There's another setup that deserves special mention, and it's the monorepo concept. In this setup, the code for many projects is stored in the same repository. This setup contrasts with a polyrepo, where each application, or even each component in an application has its own repository. Monorepos have a lot of advantages, especially in large organizations, but the design and implementation of such a system is very complex, requires an organization-wide commitment, and can take months if not years. Therefore choosing a monorepo is not a decision that can be taken lightly or done in isolation.

| Pros | Cons | |

|---|---|---|

| One repo per application |

|

|

| One repo per module |

|

|

| Monorepo |

|

|

Git Branching Strategies

One mechanism that must be defined early in the development process is the Git branching strategy. This defines how and why development branches will be created, and how they will be merged back into the long-lived branches from which releases are created. We're going to focus on three different strategies that are well-defined:

GitFlow

GitFlow is a Git workflow that involves the use of feature branches and multiple primary branches.

The main or master branch holds the most recently released version of the code.

A develop branch is created off the main or master branch. Feature branches are created from develop. Once development is done in feature branches, the changes are merged back into develop.

Once develop has acquired enough features for a release (or a predetermined release date is approaching), a release branch is forked off of develop. Testing is done on this release branch. If any bugfixes are needed while testing the release branch a releasefix branch is created. Fixes must then be merged to the release branch as well as the develop branch. Once the code from the release is released, the code is merged into main or master. During the time the release is being tested, new feature releases can still occur in the develop branch.

If any fixes are needed after a release a hotfix branch is created. Fixes from the hotfix branch must be merged back into develop and main/master.

GitFlow is a heavy-weight workflow and works best for projects that are doing Semantic Versioning or Calendar Versioning.

GitHub Flow

GitHub Flow is a lightweight, branch-based workflow.

In GitHub Flow, feature branches are created from the main or master branch.

Development is done in the feature branches.

If the development of the feature branch takes a long time,

it should be refreshed from main or master regularly.

Once development is complete, a pull request is created to merge the code back into main or master.

The code that is merged into main or master should be ready to deploy. No incomplete code should be merged.

GitHub Flow works best for projects that are doing Continous Delivery (CD).

Trunk-Based

Trunk-based development is a workflow where each developer divides their work into small batches.

The developer merges their work back into the trunk branch regularly, once a day or potentially more often.

The developers must also merge changes from trunk back into their branches often, to limit the possibility of conflicts.

Trunk-based development is very similar to GitHub Flow, the main difference being that GitHub Flow has longer-lived branches with larger commits.

Trunk-based development forces a reconsideration of the scope of the tickets, to break up the work into smaller chunks that can be integrated regularly back into the trunk.

- What Version Control System will we use?

- How many repositories will our application use?

- Which branching strategy will the team use?

Project Generators

Most frameworks provide tools that make it easy to create the basic file structure. This saves the effort of having to find the right formats for the files and creating them by hand, which can be an error-prone process.

This functionality might be integrated as part of the build tools for your particular ecosystem.

For example Maven's mvn archetype:generate, Gradle's gradle init, and NPM's npm init will generate the file structure for a new project.

These tools take basic input such as the component's name, id, and version and generate the corresponding files.

Some stand-alone CLI tools provide this functionality and tend to have more features. Some examples are express-generatorhttps://expressjs.com/en/starter/generator.html, jhipster, and Spring Boot CLI.

Another option is to use a website that will build the basic file structure for your project from a very user-friendly webpage. For example Spring Initializr generates spring boot projects, and allows you to select extra modules.

Finally, your organization might provide its own generator, that will set up the basic file structure for your project, while also defining organization-specific data such as cost codes.

- What tool or template are we going to use to create the basic file structure of our component(s)?

Project Structure

The basic structure we choose for our code will depend on how our code will be used.

If we're creating a request processing application we can consider how the requests or operations will flow through the code, how the application is exposed, and what external systems or data sources we will rely on. A lot of these questions make us step into "architect" territory, but at this level, there's a lot of overlap.

The structure of our code will vary significantly if we're building a service compared to if we're building a library that will be used by other teams. How we structure the code might also vary if we're keeping our code internal, or if it's going to be made available to outside parties.

In terms of the project structure, the layout of the files should lend itself to easily navigating the source code. In this respect, the layout normally follows the architecture, with different packages for different architectural layers or cross-cutting concerns.

Given the large number of variations and caveats, we're going to focus on very basic for general purpose architecture that we detail in the next section.

Basic Backend Architecture

Code can always be refactored, but ensuring we have a sound architecture from the start will simplify development and result in higher quality. When we talk about higher-quality code, we mean not only code with fewer bugs but also code that is easier to maintain.

Applications will vary greatly, but for most backend applications, we can rely on a basic architecture that will cover most cases:

This architecture separates the code according to the way requests flow. The flow starts in the "Controllers & Endpoints" when requests are received, moving down the stack to "Business Logic" where business rules and logic are executed, and finally delegating to "Data Access Objects" that abstract and provide access to external systems.

Other classes would fall into the following categories:

- Domain Model

- Utility Classes and Functions

- Cross-cutting Concerns

In such an architecture, the file layout will look like this:

src

├───config

├───controllers

│ └───handlers

├───dao

│ └───interceptors

├───model

│ └───external

├───services

│ └───interceptors

└───utils

This structure is based on the function of each component:

config: Basic configuration for the application.controllers: Controllers and endpoints.controllers/handlers: Cross-cutting concerns that are applied to controllers or endpoints, such as authentication or error handling.dao: Data access objects that we use to communicate with downstream services such as databases.dao/interceptors: Cross-cutting concerns that are applied to the data access objects, such as caching and retry logic.model: Domain object models. The classes that represent our business entities.model/external: Represents the entities that are used by users to call our service. These are part of the contract we provide, so any changes must be carefully considered.services: The business logic layer. Services encapsulate any business rules, as well as orchestrating the calls to downstream services.services/interceptors: Cross-cutting concerns that are applied to the services, such as caching or logging.utils: Utilities that are accessed statically from other multiple components.

This file structure is only provided as an example. Different names and layouts can be used as needed, as long as they allow the team to navigate the source with ease. There will normally be a parallel folder with the same structure for the unit tests.

Controllers and Endpoints

Controllers and Endpoints comprise the topmost layer, These are the components that initiate operations for our backend service. These components are normally one of the following:

- The REST or API endpoint listening for HTTP traffic.

- The

Controllerwhen talking about MVC (Model View Controller) applications. - The Listener for event-driven applications.

The exact functionality of the component depends on how the type of application, but its main purpose is to handle the boundary between our application and the user or system that is invoking our service.

For example, for HTTP-based services (web services, MVC applications, etc), in this layer we would:

- Define the URL that the endpoint will respond to

- Receive the request from the caller

- Do basic formatting or translation

- Call the corresponding Business Logic

- Send the response back to the caller

For event-driven applications, the components in the layer would:

- Define the source of the events

- Poll for new events (or receive the events in a pull setup)

- Manage the prefetch queue

- Do basic formatting or translation

- Call the corresponding Business Logic

- Send the acknowledgment (or negative acknowledgment) back to the event source

In both the HTTP and the event-driven services, most of this functionality is already provided by the framework, and we just need to configure it.

There is one more case that belongs on this layer, and it relates to scheduled jobs. For services that operate on a schedule, the topmost layer is the scheduler. The scheduler handles the timing of the invocation as well as the target method within the Business Logic layer that will invoked. In such cases, we can also leverage a framework that will provide a scheduler functionality.

Business Logic

The middle layer is where our "Business Logic" lives.

This includes any complicated algorithm, business rules, etc.

It is in this layer that the orchestration of any downstream calls occurs.

The components in this layer are normally called Services.

This layer should be abstracted away from the nuanced handling of I/O.

This separation makes it easier for us to mock upstream and downstream components and easily create unit tests.

It is in this layer where the expertise of business analysts and subject matter experts is most important, and their input is critical.

Involving them in the design process, and working with them to validate the test cases. We go into detail about specific techniques in Chapter 5.

Data Access Objects

The bottom-most layer provides a thin layer to access downstream services. Some of these downstream services can be:

- Databases: If our service needs to read and write data from a database.

- Web Services: Our service might need to talk to other services over a mechanism such as REST or gRPC.

- Publishing Messages: Sometimes our application might need to send a message, through a message broker or even an email through SMTP (Simple Mail Transfer Protocol).

Using a Data Access Object helps to encapsulate the complexity of dealing with the external system, such as managing connections, handling exceptions and retries, and marshaling and unmarshalling messages.

The objective of separating the data access code into a separate is to make it easier to test the code (in particular the business logic code). This level of abstraction also makes it easier to provide a different implementation at runtime, for example, to talk to an in-memory database rather than to an external database.

In a few cases, there might not be a need for a Data Access Object layer. For applications that rely only on the algorithms present in the Business Logic layer and don't need to communicate to downstream services, the Data Access layer is irrelevant.

Domain Model

The Domain Model is the set of objects that represent entities we use in our systems. These objects model the attributes of each object and the relationships between different objects. The Domain Model defines the entities our service is going to consume, manipulate, persist, and produce.

In some cases, it's important to separate the external model from the internal model. The external model is part of the contract used by callers. Any changes to the external model should be done with a lot of care, to prevent breaking external callers. More details on how to roll out changes to the external model can be found in Chapter 14 The internal model is only used by our application or service and can be changed as needed to support new features or optimizations.

In traditional Domain-Driven Design (DDD), the Domain Model incorporates both behavior and data. However, nowadays most behavior is extracted to the business layer, leaving the Domain Model as a very simple data structure. Nonetheless, the correct modeling of the domain objects is critical to producing maintainable code. The work of designing the Domain Model should be done leveraging the expertise of the business analysts and subject matter experts.

The Domain Model can be represented by an Entity Relationship Diagram (ERD).

Utility Classes and Functions

In this context "utility" is a catch-all for all components (classes, functions, or methods) that are used to perform common routines in all layers of our application.

These utility classes and functions should be statically accessible. Because of their wide use, it does not make sense to add them to a superclass. Likewise, utility classes are not meant to be subclassed and will be marked as such in languages that support this idiom (for example final in Java).

Cross-Cutting Concerns

Cross-cutting concerns are parts of a program that affect many other parts of the system. Extracting cross-cutting concerns has many advantages when writing or maintaining a piece of software:

- Reusability: Allows the code to be used in different parts of the service.

- Stability and reliability: Extracting the cross-cutting concern makes it easier to test, increasing the stability and reliability of our service.

- Easier extensibility: Using a framework that supports cross-cutting concerns makes it easier to extend our software in the future.

- Single responsibility pattern: It helps ensure that our different components have a single responsibility.

- SOLID and DRY: It makes it easier to follow best practices such as SOLID and DRY(Don't Repeat Yourself).

To implement the cross-cutting concerns we want to leverage our framework as much as possible. Many frameworks provide hooks that we can use to save time. However, we also want to understand how the framework implements the hooks, to be able to debug and optimize these components. We explore in more detail some of the techniques used by common frameworks in Chapter 13.

There are some disadvantages to abstracting away cross-cutting concerns, especially when relying on "convention over configuration". Some developers might have a harder time following the logic if they don't understand the framework. Also "convention over configuration" often conflicts with another software design principle: "explicit is better than implicit". As Senior Software Developers, we must balance the ease of maintaining and ease of testing with the technology and functionality we're bringing into the code base.

The design of how the cross-cutting concerns will be implemented is a vital part of the architecture of the application. These are important decisions, but one of the advantages of decoupling this functionality into separate components, is that it makes it easier to fix any shortcomings.

How cross-cutting concerns are supported varies from framework to framework. Here are some common mechanisms:

- Aspect Oriented Programming

- Filters and Middleware

- Decorators and Annotations

These mechanisms are explained in detail in Chapter 13.

In the next sections, we'll introduce some core cross-cutting concerns. Many decisions should be made for each one of these cross-cutting concerns, but those decisions are formally listed in the respective in-depth chapters, rather than the introductions from this chapter.

Logging

Logging information about the application's behavior and events can be helpful for debugging, monitoring, and analysis. Logging is a cross-cutting concern because you want to abstract away and centralize the details of how the logging is done. These details include:

- Which library should we choose?

- Where should we write to? Most libraries allow the configuration of log sinks. Depending on how our application is configured, we might want to write or append to standard output to be picked up by an external logging agent. In other cases, we'll write directly to our logging service.

- What format should we use? Most logging libraries use plain text by default, and we can configure that plain text format with a custom pattern. Depending on which logging service we're using, we might choose a structured logging format such as JSON to be able to emit extra metadata.

The actual logging of the messages is normally left up to individual methods, but an application-wide vision is needed. For example, a Senior Developer or Architect should decide if context-aware logging is going to be used, and if so what information should be recorded as part of the context. Context-aware logging is a mechanism to enrich log messages with data that might be unavailable in the scope in which the log statement executes. This is also sometimes referred to as Mapped Debugging Context. We discuss this technique in more detail in Chapter 19.

Security

Ensuring the confidentiality, integrity, and availability of data is a critical concern for many applications. This can involve implementing authentication, authorization, and encryption mechanisms.

As Senior Developers we have to decide what mechanisms are necessary, and where they should be implemented.

For example, one of the decisions that is commonly relevant for a backend service is how will users authenticate to use our application.

There are many options to consider:

- Usernames and Passwords

- Tokens (for example OAuth or JWT)

- Certificate-based authentication

- OpenId or SAML

Due to the risks involved, it's better to use an existing framework for security rather than writing our own. Many frameworks provide out-of-the-box solutions or provide hooks to support a modular approach.

For example in the Java/Spring ecosystem Spring Security provides a very feature-rich solution.

Neither Flask nor Express provides an out-of-the-box security solution but rather provides hooks into which third-party solutions can be adapted. These third-party solutions are normally applied as middleware, functions that have access to the request and response objects, and the next middleware function in the application’s request processing chain.

Where should security be implemented?

Security can be applied at different layers. However it is mostly applied at the Controller and Endpoints layers, and in the Business Logic layer less often. In general the closer to the user the better. In some cases, the authentication and authorization is offloaded from the application, and handled by an API Gateway or a Service Mesh.

Security is a very broad topic, and there are many specialized sources on the topic, however, we do talk more in detail in Chapter 9.

Caching

Caching can help us achieve two main goals, improve performance and improve reliability. Caching can improve the performance of an application by reducing the number of trips to the database or other back-end systems. Caching can also improve the reliability of an application by keeping a copy of data that can be used when downstream services are unavailable. However, caching comes with a significant amount of extra complexity that must be considered.

There are only two hard things in Computer Science: cache invalidation and naming things.

-- Phil Karlton

Where should caching happen in a back-end application?

Caching can be implemented at every layer of the application, depending on what we're trying to achieve. However, it's best to not implement caching directly on the Data Access Objects, to maintain a single responsibility.

Caches can be shared across multiple instances or even multiple applications. Caches can also be provisioned on a per-instance basis. All of these parameters determine the effects caching will have on reliability, scalability, and manageability. Caching also introduces the need to consider extra functionality, for example, to handle the invalidation of entries that are no longer valid or stale.

Caching is a complicated topic with many implications, and we just scratched the surface here. We talk more in detail in Chapter 11.

Error Handling

Error handling is often overlooked as a cross-cutting concern, but a good setup from the beginning will make the application more maintainable and provide a better user experience in the worst moments. First and foremost, error handling is about trying to recover from the error if at all possible. How we do this depends greatly on our application and the type of error.

However, error handling is also about handling cases when we can't recover from the error. This entails returning useful information to the user while ensuring we don't leak private or sensitive information. Approaching error handling as a cross-cutting concern can help us simplify our code while providing a homogeneous experience for our users, by allowing us to handle errors regardless of where they occur in the application.

As part of the recurring theme, we should leverage our framework to simplify the work of handling errors. Our framework can help us map exceptions to HTTP return codes. The framework can help us select the proper view template to render user-readable error messages. Finally, most frameworks will provide default error pages for any exception not handled anywhere else.

For example, in a Spring-based application we can use an HandlerExceptionResolver

and its subclasses ExceptionHandlerExceptionResolver,

DefaultHandlerExceptionResolver,

and ResponseStatusExceptionResolver.

Express provides a simple yet flexible error handling mechanism, with a default error handler and hook to write custom error handlers.

By default, Flask handles errors and returns the appropriate HTTP error code. Flask also provides a mechanism to register custom error handlers.

If we refer to the core architecture we proposed, where should error handling be done?

Normally we want to handle unrecoverable errors as close to the user as possible. We do this have bubbling up exceptions up the stack until they are caught by an Exception Handler and properly dealt with. If we're talking about recoverable errors, we need to handle them in the component that knows how to recover from them, for example by retrying the transaction or using a circuit breaker pattern to fall back to a secondary system.

More detail on error handling as a cross-cutting concern can be found in (Chapter 12)[./chapter_12.md#error-handling].

Transactions

Transactions ensure that changes to data are either fully committed or fully rolled back in the event of an error, which helps maintain data consistency. Given the rise of NoSQL databases, transactions are not as widely used, but they're still very relevant when working with a traditional RDBMS (relational database management system).

Once we have determined the data sources that we will be interacting with, we have to determine if they support transactions. For data sources that don't support transactions, we have to think of other ways to reconcile failed updates.

Transactions can encompass a single data source or multiple data sources. If we have transactions across multiple data sources, we have to determine if they support a global transaction mechanism such as XA.

If we're going to use transactions, we need to select a transaction manager and integrate it into our application. If we're using a transaction manager, we must also decide how we're going to control the transactions. Transactions are either controlled programmatically or declaratively. Programmatic transactions are explicitly stated and committed. Declarative transactions are delineated by the transaction manager based on our desired behavior as indicated by special annotations (such as Transactional in Java).

Where should transaction control happen?

Transaction control normally happens in the Business Logic layer, or the gap between the Business Logic layer and the Data Access Objects layer. It is generally a bad idea to control transactions from the Controller and Endpoints layer since it would require the caller to know something about the internal workings of the lower layers, breaking the abstraction (also known as a leaky abstraction).

More details on transactions can be found in (Chapter 16)[./chapter_16.md#transactions].

Internationalization or Localization